As with any good predictive model, we need to assess the accuracy of our ESCplus predictions so we can go back and recalibrate if necessary. Let's look at how it fared in the 2019 season:

Semi-final 1 – May 14, 2019

This was something of a disaster. We wrongly predicted that Portugal, Belgium and Hungary would qualify, and we missed Belarus, Estonia and Serbia.

Conclusion: I too wanted Conan to qualify for Portugal, but I suspect our data carries an inherent bias towards our large readership in Portugal and Spain. I'll chalk that miss up to skew in the data.

Belgium was always going to be close. I think the tool favoured Eliot because the track performed so well, while the live performance let us down.

Estonia is the one I'd like to dig into further. We had Estonia very close to 10th place; the real issue was that we overrated Hungary's chances, in line with the bookies. I stand by my theory that the odds overestimate returning artists.

Where I don't have much of an explanation is Serbia, and why we were so far off there. It could be that Kruna simply didn't perform well as a video or audio track, but we should have caught on to Nevena's fierceness.

We got Belarus wrong too, but let's face it: how were we supposed to know who would like it?

Semi-final 2 – May 16, 2019

A perfect 10 out of 10! This was a case where I doubted the model and applied my own human wisdom and instinct, which meant I settled for 7/10 on my bet with Jamie. I could have had a perfect score if I had stuck with the pure algorithm.

Grand Final – May 18, 2019

Saying the tool did its job by predicting the Netherlands' win doesn't say much. The Netherlands had been the odds favourite for most of the pre-season period, which made it the safest bet for the final. Where it gets interesting is when we look at the top 10 and bottom 5 to see how good an overall picture the tool was able to predict.

The top 10:

Strictly speaking, the tool doesn't predict the top 10 but rather the 10 entries most likely to win; for the sake of this article, we'll treat it as the former. And wow, we did well here: 9/10.

Here we have the big names: Italy, Sweden, Switzerland and the Netherlands, all to be expected. Where I thought the tool was particularly omniscient was in placing Norway and North Macedonia in the top 10 as well.

Our one miss in the top 10 was Iceland, which we had in 13th and which finished 10th. Not bad at all.

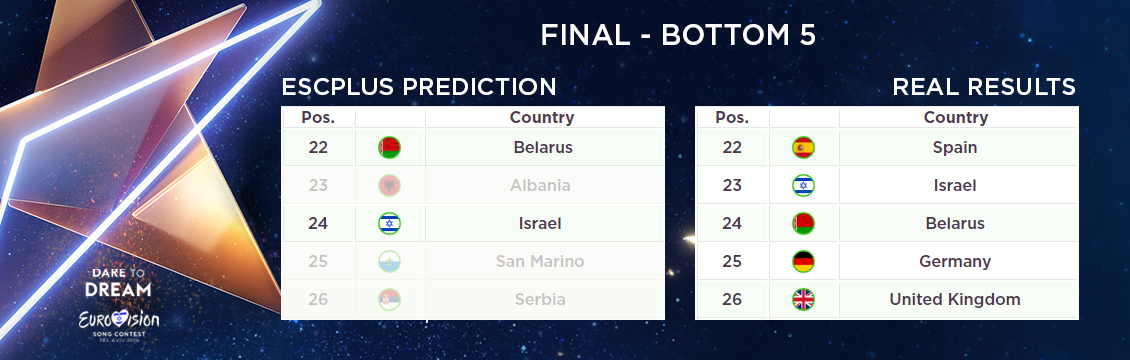

The bottom 5:

To test how well the tool performed overall, let's look at the opposite end of the table. Did we forecast the right bottom five?

We had:

- 26th – Serbia – actual finish 17th

- 25th – San Marino – actual finish 20th

- 24th – Israel – actual finish 23rd

- 23rd – Albania – actual finish 18th

- 22nd – Belarus – actual finish 25th

So there's some good correlation here, with Serbia once again the biggest miss and some really close predictions for Belarus and Israel. I'm satisfied that no entry in this group finished higher than Serbia's 17th. Taking the Albania and Serbia misses together, we may need to adjust for non-English-language songs, which are perhaps underrated in their recorded versions. In this grouping, we captured 2/5.
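For anyone who wants to reproduce the bottom-five scoring, here is a minimal Python sketch that uses only the five placings listed above. The names `predictions`, `bottom_n_hits` and `placing_errors` are hypothetical, and the sketch assumes a 26-entry Grand Final in which the bottom five are places 22 to 26. It is not the ESCplus model itself, just the bookkeeping behind the 2/5 figure and the size of each miss.

```python
# Predicted vs actual Grand Final placings for the five entries
# the tool ranked lowest (figures copied from the list above).
predictions = {
    "Serbia": (26, 17),
    "San Marino": (25, 20),
    "Israel": (24, 23),
    "Albania": (23, 18),
    "Belarus": (22, 25),
}

FIELD_SIZE = 26  # assumption: 26 entries in the 2019 Grand Final
BOTTOM_N = 5


def bottom_n_hits(preds, field_size=FIELD_SIZE, n=BOTTOM_N):
    """Count predicted bottom-n entries that really finished in the bottom n."""
    cutoff = field_size - n + 1  # 22nd place or lower counts as bottom five
    return sum(1 for _, actual in preds.values() if actual >= cutoff)


def placing_errors(preds):
    """Absolute gap, in places, between predicted and actual finish."""
    return {country: abs(pred - actual) for country, (pred, actual) in preds.items()}


errors = placing_errors(predictions)
print(f"Captured {bottom_n_hits(predictions)}/{BOTTOM_N}")
print(f"Biggest miss: {max(errors, key=errors.get)}")
print(f"Mean absolute error: {sum(errors.values()) / len(errors):.1f} places")
```

Mean absolute error is a blunt measure, but it makes Serbia's nine-place miss stand out clearly against Israel's one-place miss.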

On the flip side, we didn't predict the UK's dismal overall result, and we should have.

Did you have fun following the prediction updates? Any suggestions? Let us know in the comments.